ASCII code points and encoding

An encoding maps a codepoint to a sequence of code units. Unicode is notable for having many encodings, including UTF-8, UTF-16 and UTF-32.
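To make the code point versus code unit distinction concrete, here is a minimal Python sketch that encodes the same code point with each of the three encodings named above and splits the resulting bytes into code units: 8-bit units for UTF-8, 16-bit units for UTF-16, 32-bit units for UTF-32. The helper name `code_units` and the sample characters are illustrative choices, not from the original text. The big-endian codec variants are used so that Python does not prepend a byte order mark.

```python
# Sketch: one code point, three Unicode encodings, different code units.
# `code_units` is a hypothetical helper written for this illustration.

def code_units(ch: str, encoding: str, unit_bytes: int) -> list[int]:
    """Encode `ch` and split the bytes into code units of `unit_bytes` bytes each."""
    data = ch.encode(encoding)
    return [int.from_bytes(data[i:i + unit_bytes], "big")
            for i in range(0, len(data), unit_bytes)]

# "A", the euro sign (U+20AC) and a grinning-face emoji (U+1F600)
for ch in ("A", "\u20ac", "\U0001F600"):
    print(f"U+{ord(ch):04X}",
          "UTF-8:", [f"{u:02X}" for u in code_units(ch, "utf-8", 1)],
          "UTF-16:", [f"{u:04X}" for u in code_units(ch, "utf-16-be", 2)],
          "UTF-32:", [f"{u:08X}" for u in code_units(ch, "utf-32-be", 4)])
```

For U+1F600 the code point is the same in all three cases, but UTF-8 needs four code units (F0 9F 98 80), UTF-16 needs a surrogate pair (D83D DE00), and UTF-32 needs a single unit.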

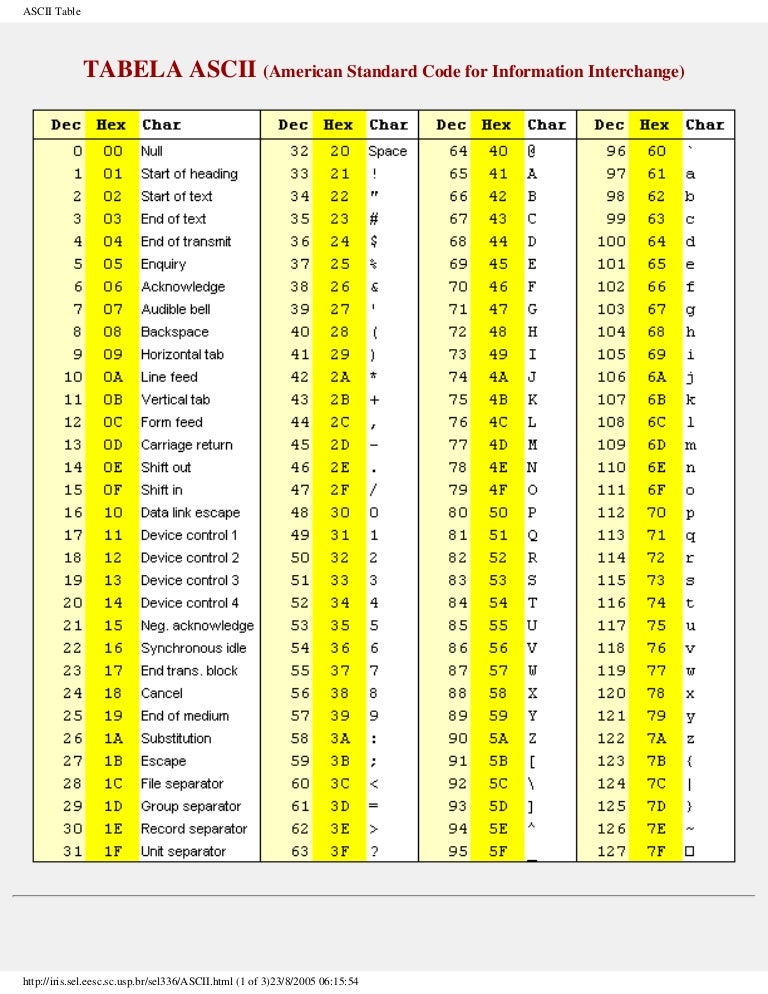

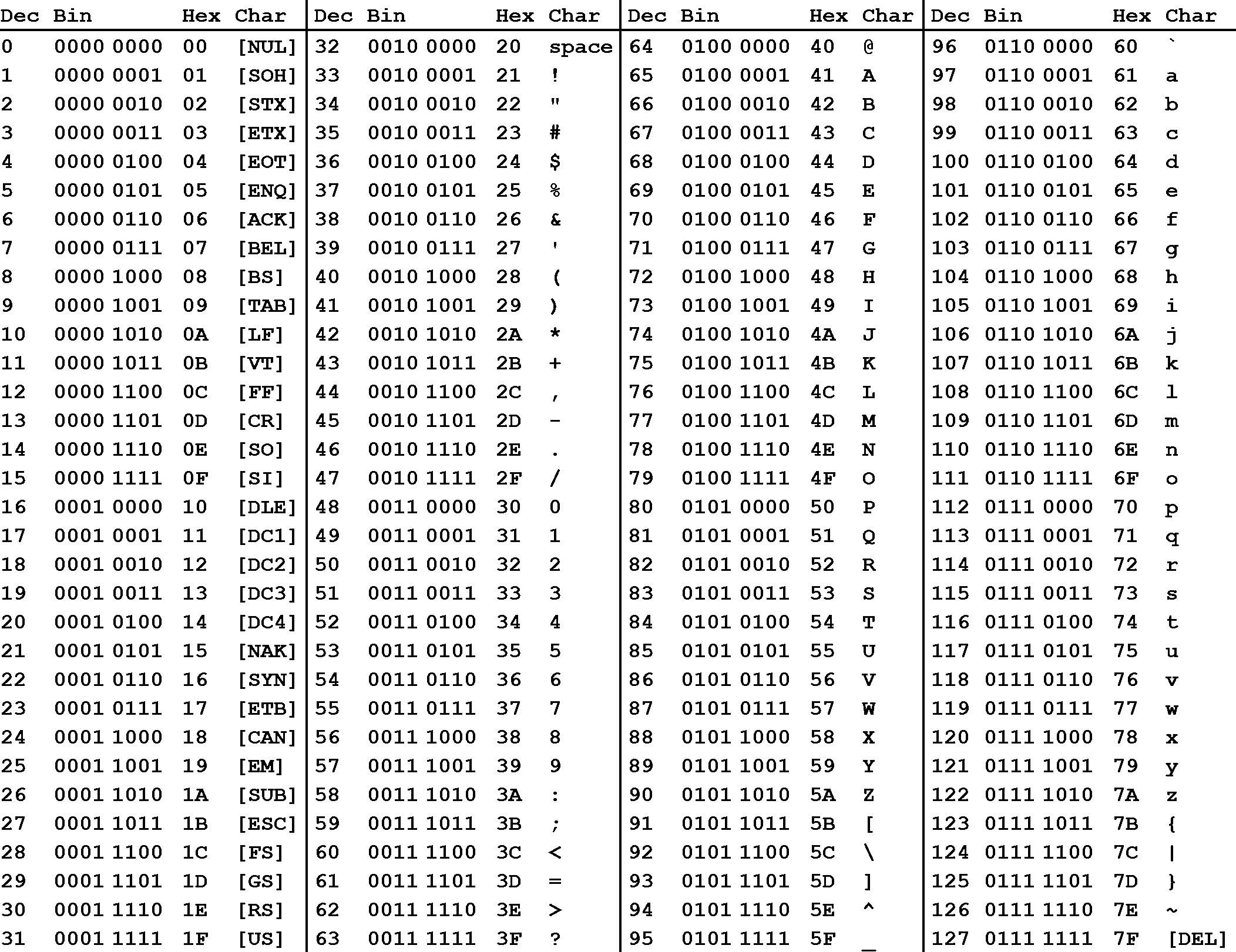

I found an interesting article, "A tutorial on character code issues" ( ), which explains the terms "character code"/"code point" and "character encoding". The former is just an integer assigned to a character. The character encoding defines how such a code point is represented via one or more bytes.

For the good old ASCII, the author says: "The character encoding specified by the ASCII standard is very simple, and the most obvious one for any character code where the code numbers do not exceed 255: each code number is presented as an octet with the same value."

So 65, which is the code point for A, would be encoded as 0100 0001. Because ASCII has 128 characters, there are 128 code points (0 to 127), and each code point is always encoded by one byte.

If I summarize this, I have the following steps to encode characters in ASCII:

1. Assign a number (code point) to each character (e.g. A → 65).
2. Encode the character with a byte that has the same value (e.g. 65 → 0100 0001).

Why this separation of code points and encoding in ASCII? ASCII has only one encoding, so at least for ASCII it is not clear to me why the intermediate step (mapping to an integer) is done. If I had multiple encodings for an ASCII character, the separation would be reasonable, but with only one encoding form it doesn't make sense to me. Would … also be possible or not?

Each concept doesn't necessarily require a discernible implementation for a particular encoding. Actually, you could consider ASCII to have two encodings, one 7-bit and one 8-bit. The 7-bit form was used along with a scheme that puts a parity bit in the 8th bit of a byte. But when discussing character sets and encodings in general, it's good to have all the concepts distinguished.
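A minimal Python sketch of the two ASCII steps (character to code point, code point to byte), plus a 7-bit form that carries a parity bit in the 8th bit. The function names are made up for illustration, and even parity is an assumption here; real links could use even or odd parity. Python's built-in `ord` performs the first step, and in the plain 8-bit form the byte value simply equals the code point, which matches what `"A".encode("ascii")` produces.

```python
# Sketch of the two ASCII steps, plus a "7-bit + parity" byte form showing
# that one code point can have more than one byte representation.
# All function names are hypothetical; even parity is an assumption.

def ascii_code_point(ch: str) -> int:
    """Step 1: character -> code point (an integer in 0..127)."""
    cp = ord(ch)
    if cp > 0x7F:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return cp

def encode_8bit(cp: int) -> int:
    """Step 2, plain 8-bit form: the byte has the same value as the code point."""
    return cp  # the high bit is always 0 for an ASCII code point

def encode_7bit_even_parity(cp: int) -> int:
    """7-bit form: the 8th (high) bit is set so the total number of ones is even."""
    parity = bin(cp).count("1") % 2  # 1 if the 7 data bits contain an odd number of ones
    return cp | (parity << 7)

cp = ascii_code_point("A")
print(cp)                                                         # 65
print(f"{encode_8bit(cp):08b}")                                   # 01000001
print(f"{encode_7bit_even_parity(cp):08b}")                       # 01000001
print(f"{encode_7bit_even_parity(ascii_code_point('C')):08b}")    # 11000011
```

"A" (two one-bits) gets parity bit 0, so both forms give the same byte. "C" (code point 67, three one-bits) becomes 1100 0011 with parity instead of 0100 0011: the same code point, a different byte. Keeping the code point separate from its byte representation is what lets both forms describe the same character.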
